Dealing with Delay in Wireless Hearing Systems

This post describes my presentation “Delay-tolerant signal processing for next-generation wireless hearing systems,” given at the International Hearing-Aid Research Conference (IHCON) in August 2024, and my paper “Mixed-delay distributed beamforming for own-speech separation in hearing devices with wireless remote microphones,” presented at the IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA) in October 2023.

Intro

People with hearing loss struggle most in noisy, reverberant environments – like crowded restaurants and chaotic group fitness classes – and hearing aids don’t do much to help. Wireless assistive listening devices, like lapel microphones clipped to dining partners or headsets worn by fitness instructors, can provide dramatic benefits by transmitting clean, clear sound directly to the user’s ears. Our group has been exploring immersive remote microphone systems for group conversations, including some systems that can use digital devices like smartphones that users probably already have with them.

Digital wireless technology, especially widely used standards like Wi-Fi and Bluetooth, could make assistive listening more accessible. There is one big problem, though: delay.

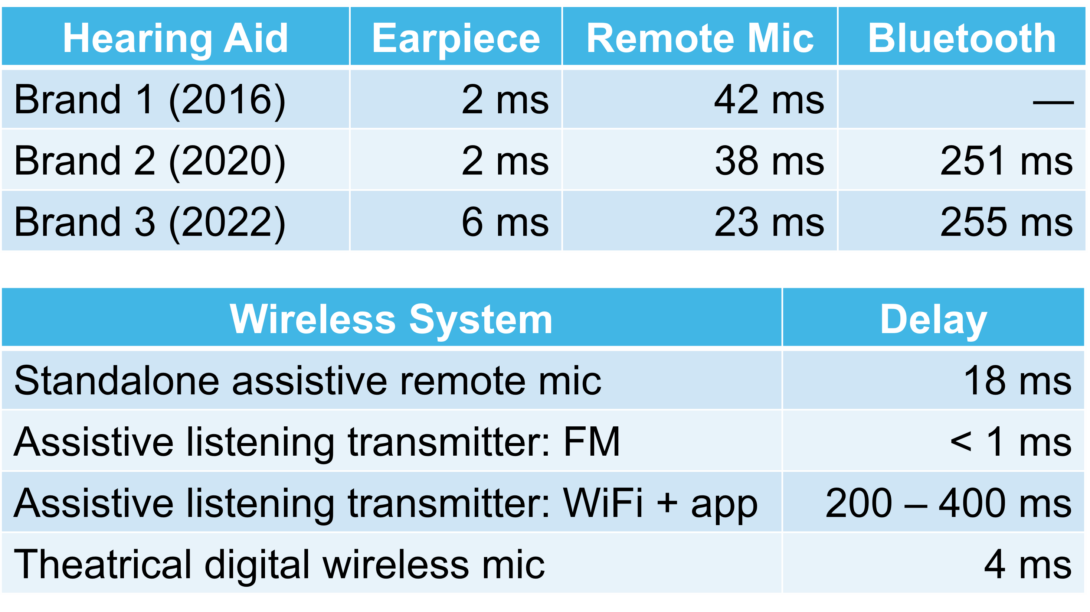

Most hearing aids have delays of just a few milliseconds. Longer delays can be noticeable and distracting. Delay is particularly harmful when users hear their own voice echoed back: more than a few milliseconds of delay can make it difficult to speak.

This delay requirement is a major constraint on hearing aid performance. Signal processing algorithms, from classic filters to modern deep learning methods, do better if they are allowed longer delay. Advanced algorithms like noise reduction, source separation, and dereverberation would work better if they could delay the signal by 50 or 100 ms, but that would be unacceptable for listeners.
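One reason longer delay helps: many of these algorithms operate on blocks of samples, such as short-time Fourier transform (STFT) frames, and a block-based processor cannot output a sample until its whole block has arrived. The minimal sketch below assumes a 16 kHz sample rate and treats the algorithmic delay as roughly one window length, as in a standard overlap-add setup, ignoring computation and converter delays. The function name `stft_tradeoff` is hypothetical. It shows how a longer window buys finer frequency resolution at the cost of delay.

```python
# Rough delay vs. frequency-resolution trade-off for a block-based (STFT) processor.
# Assumptions: algorithmic delay ~ one analysis window; compute time and
# converter/buffering delays are ignored; 16 kHz sample rate by default.


def stft_tradeoff(window_ms: float, fs_hz: float = 16_000.0) -> tuple[int, float, float]:
    """Return (window length in samples, approx. delay in ms, bin spacing in Hz)."""
    n = round(window_ms * 1e-3 * fs_hz)  # window length in samples
    delay_ms = 1e3 * n / fs_hz           # must buffer a full window before output
    bin_hz = fs_hz / n                   # spacing between STFT frequency bins
    return n, delay_ms, bin_hz


if __name__ == "__main__":
    for w in (4.0, 8.0, 50.0, 100.0):
        n, d, b = stft_tradeoff(w)
        print(f"{w:6.1f} ms window: {n:5d} samples, ~{d:5.1f} ms delay, {b:6.1f} Hz bins")
```

A hearing-aid-style budget of a few milliseconds leaves frequency bins hundreds of hertz wide, while a 50 or 100 ms budget allows bins of a few tens of hertz or less.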

Delay

Delays for digital wireless systems are often much longer than those in hearing aids; for example, the remote microphone that comes with my hearing aids has a delay of around 40 ms, which is very distracting. Wi-Fi and Bluetooth streaming can be even worse, up to hundreds of milliseconds. Newer wireless standards like Bluetooth Low Energy Audio are expected to lower this delay, but still not to the level of a hearing aid. Analog broadcast systems are virtually instantaneous, and professional-grade digital wireless microphones like the kind used in theaters can have very low delay, but these are not as convenient and accessible as consumer technologies.

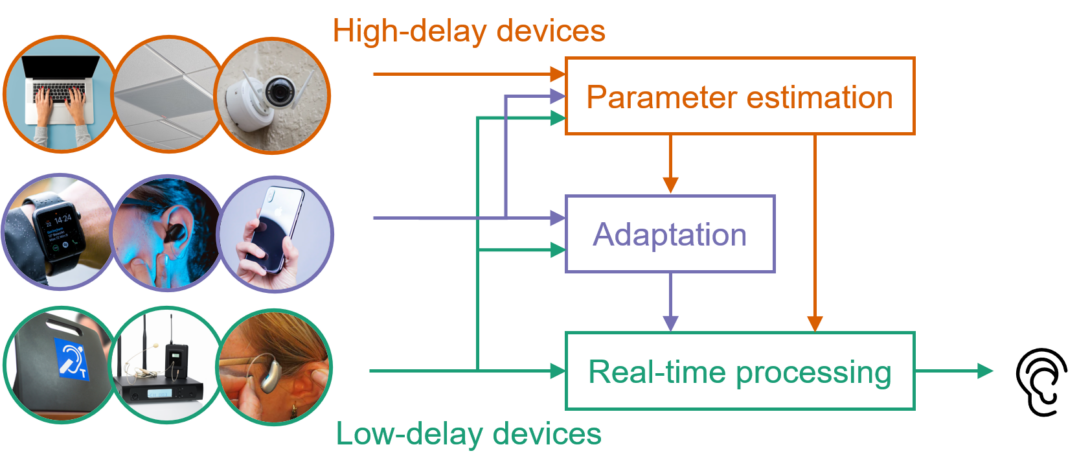

Our research group is developing strategies that use these wireless devices to make it easier to hear, even when the devices have long delays. This will allow us to take advantage of the growing connectivity of hearing aids and consumer electronics. In my presentation at IHCON 2024, I highlighted two of these delay-robust processing strategies.

Cooperative Processing

One strategy is cooperative processing, in which the delayed devices support the main hearing aids. One or more wireless systems can help the hearing aids learn how many and what kinds of sounds are in the room and, critically, where they are.

For example, in a paper at Interspeech 2022, we showed that earbuds can be used to help a microphone array “lock on” to different talkers, separating them from background noise better than it could on its own.

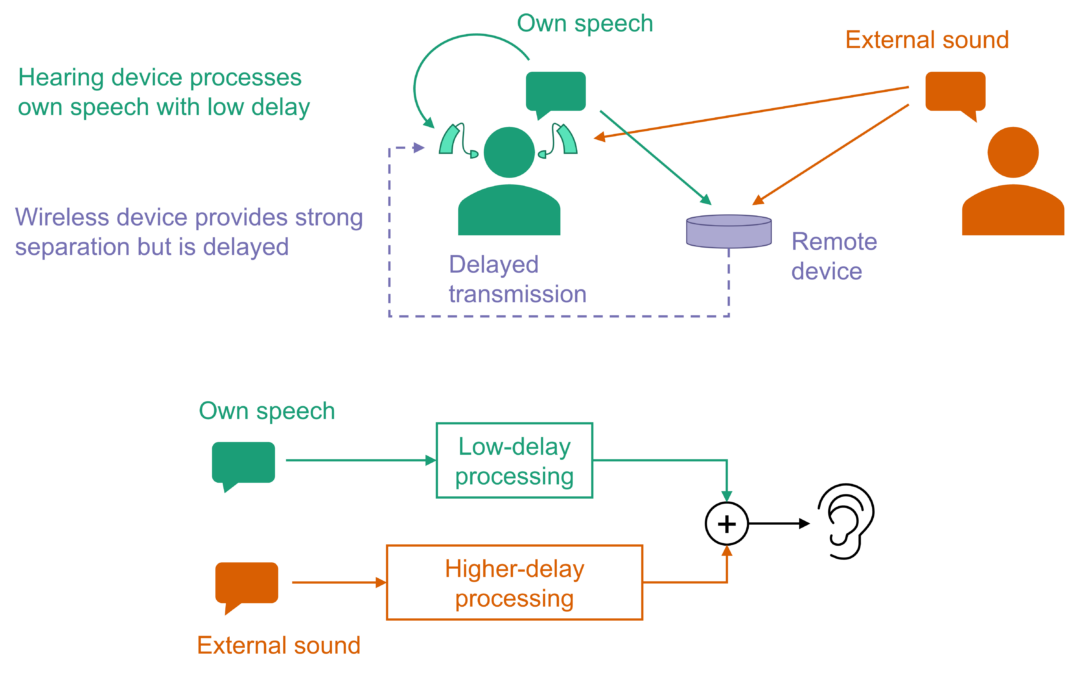
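The sketch below is not the method from the Interspeech 2022 paper. It is a generic illustration, under stated assumptions, of the cooperative idea: the delayed wireless signal only decides which past time-frequency frames belong to the target talker. Those frames are used to estimate the talker's steering vector and the covariance of everything else, and the resulting MVDR beamformer (a swapped-in, standard technique) is applied to hearing-aid frames as they arrive, so the wireless delay never reaches the listener's ear. The names `estimate_weights` and `apply_weights`, the energy-threshold mask, and the assumption that the reference is already time-aligned with the array are all hypothetical; each frequency bin is also assumed to contain some talker-active frames.

```python
import numpy as np


def estimate_weights(X_past, ref_power, thresh, diag_load=1e-6):
    """Per-frequency MVDR weights estimated from *past* hearing-aid frames.

    X_past:    (M, F, T) complex STFT of the hearing-aid microphones
    ref_power: (F, T) power of the delayed wireless reference, ASSUMED to be
               already time-aligned with X_past (hypothetical preprocessing)
    Returns (W, A): beamformer weights and estimated steering vectors, both (M, F).
    """
    M, F, _ = X_past.shape
    mask = ref_power > thresh  # True where the reference says the talker is active
    W = np.zeros((M, F), dtype=complex)
    A = np.zeros((M, F), dtype=complex)
    for f in range(F):
        x = X_past[:, f, :]
        xs, xn = x[:, mask[f]], x[:, ~mask[f]]
        R_s = xs @ xs.conj().T / max(xs.shape[1], 1)  # talker-dominated covariance
        R_n = xn @ xn.conj().T / max(xn.shape[1], 1)  # everything-else covariance
        R_n += diag_load * np.eye(M)                   # keep R_n invertible
        _, vecs = np.linalg.eigh(R_s)                  # eigenvalues in ascending order
        a = vecs[:, -1] / vecs[0, -1]                  # principal eigenvector, mic 0 as reference
        Rn_inv_a = np.linalg.solve(R_n, a)
        W[:, f] = Rn_inv_a / (a.conj() @ Rn_inv_a)     # MVDR: unit gain toward a
        A[:, f] = a
    return W, A


def apply_weights(W, X_now):
    """y[f, t] = w_f^H x[f, t]: uses only current array frames, so no wireless delay."""
    return np.einsum("mf,mft->ft", W.conj(), X_now)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    M, F, T = 4, 8, 400

    def cgauss(*shape):
        return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

    a_true = np.exp(1j * rng.uniform(0, 2 * np.pi, (M, F)))  # synthetic talker steering
    a_true[0] = 1.0
    b_int = np.exp(1j * rng.uniform(0, 2 * np.pi, (M, F)))   # synthetic interferer steering
    s = cgauss(F, T) * (np.arange(T) < T // 2)               # talker active in first half
    v = 0.3 * cgauss(F, T)                                   # interfering source
    X = a_true[:, :, None] * s + b_int[:, :, None] * v + 0.05 * cgauss(M, F, T)

    W, A = estimate_weights(X, np.abs(s) ** 2, thresh=0.1)
    Y = apply_weights(W, X)
    print("max steering-vector error:", np.abs(A - a_true).max())
```

The design choice worth noting is the split in timing: `estimate_weights` can run slowly on buffered data, whenever the delayed reference arrives, while `apply_weights` is a per-frame weighted sum on the hearing-aid signals.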

Mixed-Delay Processing

A second strategy is to process different sounds with different delays. Not all sounds need to be processed within a few milliseconds. We know that hearing aid users are most sensitive to hearing their own voice echoed back with a delay. But if someone is talking from the other side of a big, echoey room, the listener could probably tolerate more delay. These distant sounds, including background noise, are the most difficult to process and would benefit most from larger delay.

In my WASPAA 2023 paper, I proposed a mixed-delay beamforming system that uses a simple, low-delay fixed beamformer to roughly separate the user’s own speech from outside sounds. It then uses a more complex, higher-delay algorithm, together with data from the delayed wireless devices, to process the external sounds. This structure is inspired by the generalized sidelobe canceller (GSC), a classic algorithm for adaptive beamforming.
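For readers unfamiliar with the GSC, here is a minimal sketch of the textbook two-microphone Griffiths-Jim version, not the WASPAA system itself. It assumes the target is already time-aligned, so it appears identically at both microphones. A fixed beamformer (the average) passes the target, a blocking branch (the difference) removes it, and an adaptive filter, updated here with normalized LMS, subtracts whatever interference leaks through the fixed path. The function name, filter length `taps`, and step size `mu` are illustrative choices, and everything runs on a single timescale.

```python
import numpy as np


def gsc_two_mic(x1, x2, taps=16, mu=0.05, eps=1e-8):
    """Textbook two-mic Griffiths-Jim GSC, ASSUMING the target is already
    time-aligned (identical) at both microphones."""
    d = 0.5 * (x1 + x2)   # fixed beamformer: passes the aligned target unchanged
    u = x1 - x2           # blocking branch: the aligned target cancels out here
    u_pad = np.concatenate([np.zeros(taps - 1), u])
    w = np.zeros(taps)
    y = np.zeros(len(d))
    for n in range(len(d)):
        u_vec = u_pad[n:n + taps][::-1]                  # u[n], u[n-1], ..., u[n-taps+1]
        y[n] = d[n] - w @ u_vec                          # subtract interference estimate
        w += mu * y[n] * u_vec / (u_vec @ u_vec + eps)   # NLMS weight update
    return y


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    N = 40_000
    s = rng.standard_normal(N)                # target, aligned at both mics
    v = rng.standard_normal(N)                # interference at mic 1
    v2 = np.convolve(v, [0.2, 0.5])[:N]       # synthetic interference path to mic 2
    y = gsc_two_mic(s + v, s + v2)
    tail = slice(N // 2, None)                # measure after adaptation
    before = np.mean((0.5 * (v + v2))[tail] ** 2)
    after = np.mean((y - s)[tail] ** 2)
    print(f"interference reduction: {10 * np.log10(before / after):.1f} dB")
```

Because the blocking branch contains no target, the adaptive filter cannot distort it; its only job is interference cancellation. Practical GSCs often also delay the fixed-beamformer branch by a few samples so the adaptive filter can model non-causal relationships, and in a hearing aid that extra delay counts against the latency budget.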

A key takeaway from this paper is that the low-delay beamformer doesn’t need to work very well. Because the user’s own speech is already much louder in the hearing aid than it is in a distant wireless device, the system can work with just a few decibels of beamforming gain. It is the high-delay separation algorithm that needs to perform well to suppress own-speech echo and enhance external sounds. That’s good because the higher-delay algorithm can use more complicated processing and can benefit from delayed data from wireless devices.
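To see roughly why the user's own speech is already so much louder at the hearing aid than at a distant wireless device, one can apply the free-field inverse-distance law, under which level drops by about 6 dB per doubling of distance. This is a crude sketch: the distances below are hypothetical, and real mouth-to-ear paths involve head shadow and mouth directivity, while room reverberation makes the difference smaller at larger distances. With these assumed numbers it comes out to about 20 dB.

```python
import math


def inverse_distance_level_db(r_near_m: float, r_far_m: float) -> float:
    """Level difference (dB) between two distances from a point source,
    using the free-field 1/r law (about 6 dB per doubling of distance).
    Ignores head shadow, mouth directivity, and room reverberation."""
    return 20.0 * math.log10(r_far_m / r_near_m)


if __name__ == "__main__":
    # Hypothetical distances: mouth to hearing-aid mic vs. mouth to a lapel
    # mic clipped to a dining partner across the table.
    near, far = 0.15, 1.5
    diff = inverse_distance_level_db(near, far)
    print(f"own speech is ~{diff:.0f} dB weaker at the remote mic")
```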

In the future, our group plans to combine and build on both of these ideas to develop delay-tolerant processing architectures that use different devices in different ways depending on their delay, and apply different delay constraints to different sounds. By processing signals on multiple timescales, we can overcome the limitations of digital wireless delay and take full advantage of the growing connectivity of hearing aids to make it easier to hear in noise.