Group Conversation Enhancement

This post accompanies two presentations titled "Immersive Conversation Enhancement Using Binaural Hearing Aids and External Microphone Arrays" and "Group Conversation Enhancement Using Wireless Microphones and the Tympan Open-Source Hearing Platform", which were presented at the International Hearing Aid Research Conference (IHCON) in August 2022. The latter was part of a special session on open-source hearing tools.

Group conversation enhancement

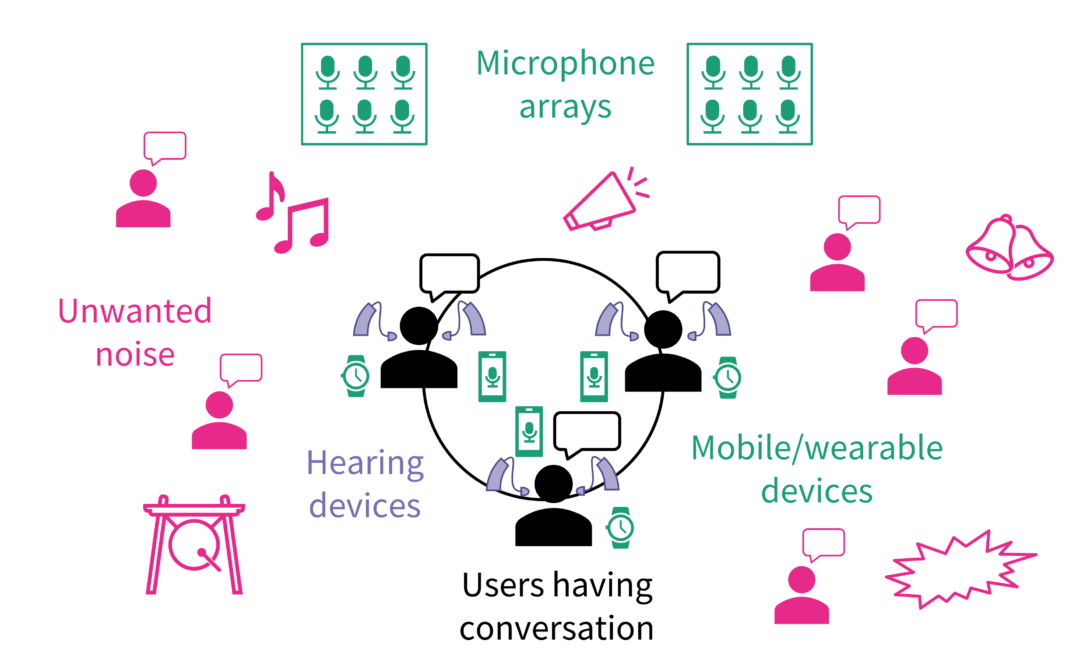

Have you ever struggled to hear the people across from you in a crowded restaurant? Group conversations in noisy environments are among the most frustrating hearing challenges, especially for people with hearing loss, but conventional hearing devices don’t do much to help. They make everything louder, including the background noise. Our research group is developing new methods to make it easier to hear in loud noise. In this project, we focus on group conversations, where there are several users who all want to hear each other.

A group conversation enhancement system should turn up the voices of users in the group while tuning out background noise, including speech from other people nearby. To do that, it needs to separate the speech of group members from that of non-members. It should handle multiple talkers at once, in case people interrupt or talk over each other. To help listeners keep track of fast-paced conversations, it should sound as immersive as possible. Specifically, it should have imperceptible delay, and it should preserve spatial cues so that listeners can tell which sounds are coming from which directions. And it has to do all that while the users are constantly moving, such as turning to look at each other while talking.

A hearing device on its own can’t distinguish speech from group members and non-members, much less enhance it while keeping up with rapid motion. To do that, we need help from extra devices that can isolate the talkers we want to hear. We will explore three strategies for tuning out background noise: using dedicated microphones clipped to each talker; using a large microphone array that can capture sound from a distance; and using mobile and wearable devices that users already have with them.

Once we have those low-noise signals from the talkers we care about, we need to process them to sound natural to the listener. Ideally, the output of the conversation enhancement should sound like what the earpiece microphones would have captured if there were no one else in the room. Last year, we proposed an adaptive signal processing method that matches the spatial cues of the low-noise signals to the sound at the earpieces. We will use that same method again here.
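To make the idea of matching spatial cues more concrete, here is a minimal sketch in Python with NumPy. It is not our published method: it swaps in a plain time-domain normalized least-mean-squares (NLMS) filter, and the function name `nlms_match` and its parameters are hypothetical. The filter learns how the talker's voice travels from the low-noise reference to one earpiece, so its output carries that ear's delay and level cues while containing little of the earpiece's noise. It assumes the reference leads the earpiece in time so that a causal filter can model the path; running one such filter per ear would preserve the interaural cues.

```python
import numpy as np


def nlms_match(reference, earpiece, num_taps=32, step=0.5, eps=1e-8):
    """Adaptively filter `reference` (a low-noise remote-mic signal) so that
    it approximates `earpiece` (the noisy signal at one ear).

    Hypothetical illustration: a plain time-domain NLMS filter, not the
    published spatial-cue-matching method. Returns the filtered reference
    (the candidate output for this ear) and the final filter taps.
    """
    w = np.zeros(num_taps)
    buf = np.zeros(num_taps)  # most recent reference samples, newest first
    out = np.zeros(len(reference))
    for n in range(len(reference)):
        buf = np.roll(buf, 1)
        buf[0] = reference[n]
        y = w @ buf            # current estimate of the earpiece signal
        e = earpiece[n] - y    # mismatch between earpiece and estimate
        # Normalized update: step size scaled by the input power.
        w += step * e * buf / (buf @ buf + eps)
        out[n] = y
    return out, w
```

In a quick simulation where the earpiece hears the reference delayed by three samples and scaled by 0.8, plus a little independent noise, tap 3 of the filter should converge close to 0.8 and the other taps close to zero. The earpiece noise is uncorrelated with the reference, so it adds jitter to the taps but does not bias them.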

Remote Microphones

Wireless microphones are already widely used in classroom settings to transmit sound directly from the teacher to a student’s hearing device. Similar products are available for adults, but they aren’t very popular, in part because they can only be used with one talker at a time. Last year, we demonstrated a system for combining two or more wireless microphones for group conversations. It works even when multiple people talk over each other, and it preserves spatial cues to help listeners track the conversation. We implemented a two-talker version of the system in real time using the Tympan open-source hearing platform. The video below, originally presented at the Acoustical Society of America Meeting, demonstrates how the algorithm reduces noise while preserving spatial cues.
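One way to combine several wireless microphones, even when their wearers talk at the same time, is to adapt one filter per microphone jointly so that the sum of the filtered signals matches the earpiece. The sketch below is a multi-input NLMS filter written under our own assumptions (the name `miso_nlms`, its parameters, and white-noise test signals are hypothetical); it is not the algorithm running on the Tympan. Because all filters share a single error signal, when two people talk at once each talker's contribution is explained by that talker's own filter instead of being treated as noise by the other.

```python
import numpy as np


def miso_nlms(references, earpiece, num_taps=16, step=0.5, eps=1e-8):
    """Jointly adapt one FIR filter per remote microphone so that the sum
    of the filtered remote signals approximates the earpiece signal.

    references: array of shape (num_mics, num_samples)
    earpiece:   array of shape (num_samples,)
    Returns (output, weights), with weights of shape (num_mics, num_taps).
    """
    num_mics, num_samples = references.shape
    w = np.zeros((num_mics, num_taps))
    buf = np.zeros((num_mics, num_taps))  # newest sample in column 0
    out = np.zeros(num_samples)
    for n in range(num_samples):
        buf = np.roll(buf, 1, axis=1)
        buf[:, 0] = references[:, n]
        y = np.sum(w * buf)   # combined estimate from all remote mics
        e = earpiece[n] - y   # one shared error drives every filter
        # Normalize by the total power across all inputs so the joint
        # update stays stable when several talkers are active at once.
        w += step * e * buf / (np.sum(buf * buf) + eps)
        out[n] = y
    return out, w
```

With two simultaneously active talkers whose paths to the earpiece are a 2-sample delay with gain 0.7 and a 5-sample delay with gain 0.4, the corresponding taps `w[0, 2]` and `w[1, 5]` should converge near those gains.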

Microphone Arrays

It is often impractical to ask conversation partners to wear wireless microphones. Instead, we can use a microphone array to capture sound from a distance by combining the signals from its microphones to emphasize sound arriving from a chosen direction; this process is known as beamforming. Microphone arrays are commonly used in consumer devices such as smart speakers and, increasingly, in room infrastructure to support remote work and teaching. For example, our building’s main conference room has three microphone-array-equipped ceiling tiles. If these could be connected to hearing devices in the room, they could help people hear better during crowded events.
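The simplest beamformer is delay-and-sum: delay each microphone's signal so that sound from the chosen direction lines up across the array, then average. The sketch below assumes a far-field talker (plane-wave arrival), known microphone positions, and a speed of sound of 343 m/s; `delay_and_sum` is a hypothetical name. It applies the delays in the frequency domain, which makes them circular, so a practical version would process short overlapping blocks rather than a whole recording.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def delay_and_sum(signals, mic_positions, direction, fs):
    """Steer a far-field delay-and-sum beam toward `direction`.

    signals:       (num_mics, num_samples) microphone recordings
    mic_positions: (num_mics, 3) microphone positions in meters
    direction:     unit 3-vector pointing from the array toward the talker
    fs:            sample rate in Hz
    """
    num_mics, num_samples = signals.shape
    # A plane wave from `direction` reaches mic m earlier by (p_m . d) / c,
    # so each channel is delayed by that amount to line the channels up.
    advances = mic_positions @ direction / SPEED_OF_SOUND
    freqs = np.fft.rfftfreq(num_samples, d=1.0 / fs)
    spectra = np.fft.rfft(signals, axis=1)
    # Multiplying by exp(-j 2 pi f tau) delays a signal by tau seconds
    # (circularly, because this operates on the whole FFT frame).
    aligned = spectra * np.exp(-2j * np.pi * freqs[None, :] * advances[:, None])
    return np.fft.irfft(aligned.mean(axis=0), n=num_samples)
```

Sound from the steered direction adds coherently, while sound from other directions is misaligned after the delays and partially cancels in the average.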

In our IHCON talk on external microphone arrays, we demonstrated a conversation enhancement system using a microphone array placed in the middle of a dining table. A group of three users wearing earpieces had a conversation while moving naturally, for example turning to look at each other. A set of three pre-calibrated beams isolates the talkers’ voices and sends the enhanced signals to the listening devices. The video below presents the audio from one user’s perspective as he turns to look at the other two talkers.
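One possible way to pre-calibrate a beam for each talker is sketched below, as an assumption rather than a description of our system. At a single STFT frequency bin, it estimates a talker's relative transfer function (RTF) with respect to microphone 0 from a calibration recording in which only that talker speaks, then forms distortionless weights that pass that talker's voice through exactly as microphone 0 hears it. The function names are hypothetical. These weights do not place nulls on the other talkers; a minimum-variance (MVDR) or linearly constrained design would be needed for that.

```python
import numpy as np


def calibrate_beam(calib_frames):
    """Estimate fixed beam weights for one talker at one frequency bin.

    calib_frames: (num_frames, num_mics) complex STFT values recorded while
    only this talker was speaking. Returns weights w with w^H rtf = 1, where
    rtf is the talker's relative transfer function with respect to mic 0.
    """
    # Spatial covariance: average of x x^H over the calibration frames.
    cov = calib_frames.T @ calib_frames.conj() / len(calib_frames)
    rtf = cov[:, 0] / cov[0, 0]
    # Scaling by 1 / ||rtf||^2 makes the beam distortionless toward rtf.
    return rtf / np.vdot(rtf, rtf).real


def apply_beams(weights, frame):
    """Apply fixed beams to one STFT snapshot.

    weights: (num_talkers, num_mics); frame: (num_mics,) complex values.
    Returns one output per talker, y_k = w_k^H x.
    """
    return weights.conj() @ frame
```

Calibrating three beams this way and then feeding in a snapshot of talker k alone should return, in output k, that talker's signal as it appears at microphone 0.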

Cooperative Processing

Wireless microphones and microphone arrays both require users to deal with extra devices, which is likely part of the reason existing products are not more widely used. But in a typical group conversation, there are already several microphones spread among the group members. We could enhance the conversation without any extra gadgets by connecting the devices that users already have, including hearing devices, smartphones, and other wearables. They can share data with each other to achieve much better noise reduction than any device could alone.
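A back-of-the-envelope simulation shows why pooling devices helps. Under idealized assumptions (every device hears the talker with the same gain and timing, and each device's noise is independent of the others'), averaging N devices leaves the speech unchanged while the noise power drops by a factor of N, so the signal-to-noise ratio (SNR) improves by about 10 log10(N) dB. Real background noise is partly correlated between nearby devices, and real devices need time alignment, so practical gains will differ. The function names and white-noise stand-in for speech are hypothetical.

```python
import numpy as np


def snr_db(clean, noisy):
    """SNR in dB of `noisy` relative to the known `clean` signal."""
    noise = noisy - clean
    return 10 * np.log10(np.sum(clean**2) / np.sum(noise**2))


def pooled_snr_gain(num_devices, num_samples=48000, noise_std=1.0, seed=0):
    """SNR improvement (dB) from averaging `num_devices` noisy copies of the
    same signal, compared with using a single device on its own.

    Idealized: identical, time-aligned speech at every device and
    independent white noise at each device.
    """
    rng = np.random.default_rng(seed)
    speech = rng.standard_normal(num_samples)  # stand-in for the talker
    noisy = speech + noise_std * rng.standard_normal((num_devices, num_samples))
    single = snr_db(speech, noisy[0])
    pooled = snr_db(speech, noisy.mean(axis=0))
    return pooled - single
```

For four devices, the result should come out close to 10 log10(4), or about 6 dB.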

We can demonstrate a simple version of cooperative processing by directly connecting two Tympan devices. The Tympan has a built-in microphone, so it can be used as a remote microphone with no extra hardware required. We can connect the line out of one Tympan to the line in of the other. The video below shows how the Tympan can be used as an immersive remote microphone. Only one Tympan was available when this demo was made, so it only shows one side of the conversation.

With fast wireless connections, we could also use smartphones as part of a cooperative processing network. Next month at the International Workshop on Acoustic Signal Enhancement (IWAENC), we will present a method for combining earpieces with mobile devices placed on a table. It uses more complex adaptive algorithms to reduce noise and track motion. We demonstrate it for a group of three moving human talkers, just like in the array demonstration above.
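Tracking motion means the filters have to follow acoustic paths that change as people turn and lean. A common generic approach, shown here as an illustration rather than as the algorithm in our IWAENC paper, is to estimate the transfer function from a reference signal to the earpiece in each STFT frequency bin using recursively averaged cross- and auto-power spectra with a forgetting factor. The function name and the default forgetting factor of 0.9 are assumptions.

```python
import numpy as np


def track_transfer_function(ref_frames, ear_frames, forget=0.9, eps=1e-12):
    """Recursively estimate H[t, f] such that ear ~= H * ref in each bin.

    ref_frames, ear_frames: (num_frames, num_bins) complex STFT arrays.
    forget: forgetting factor in (0, 1); smaller values follow motion
    faster but give noisier estimates.
    """
    num_frames, num_bins = ref_frames.shape
    cross = np.zeros(num_bins, dtype=complex)  # smoothed E[ear * conj(ref)]
    power = np.zeros(num_bins)                 # smoothed E[|ref|^2]
    estimates = np.zeros((num_frames, num_bins), dtype=complex)
    for t in range(num_frames):
        r, x = ref_frames[t], ear_frames[t]
        cross = forget * cross + (1 - forget) * x * r.conj()
        power = forget * power + (1 - forget) * np.abs(r) ** 2
        estimates[t] = cross / (power + eps)
    return estimates
```

Older frames are down-weighted geometrically, so with a forgetting factor of 0.9 a frame from 44 frames ago carries only about 1% of the weight of the current frame. In a noiseless simulation where the true transfer function switches abruptly, the estimate therefore settles on the new value within a few dozen frames.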

Scaling up conversation enhancement

Ideally, group conversation enhancement would work with any number of users. The Tympan has only four analog input and output channels, so to scale the conversation enhancement system to larger groups, we will need to develop other ways for the devices to share signals, such as digital communication.

For closely spaced group conversations where all the microphones pick up sound from all the talkers, we also need a reliable way to tell which user is speaking when. That process is called voice activity detection (VAD). Ordinary earpiece microphones work surprisingly poorly for VAD because they don’t capture much of their wearer’s voice at high frequencies. Lapel and tabletop microphones are slightly better, but only when the talkers are spread far apart. Fortunately, hearing devices and high-tech earbuds include other sensors, such as contact microphones that pick up vibrations through the body. These microphones have poor sound quality, but because they pick up very little airborne sound, they are very good at rejecting external noise.
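Because a contact microphone hears mostly its wearer, even a crude energy detector can serve as a VAD. The sketch below splits the contact-microphone signal into frames, estimates a noise floor from the quietest frames (the 10th percentile of frame energies), and flags frames more than 10 dB above it. The function name, frame length, and threshold are illustrative assumptions; a practical detector would also smooth its decisions over time, for example with a hangover period.

```python
import numpy as np


def energy_vad(contact_signal, frame_len=256, threshold_db=10.0):
    """Return one boolean per frame: True where the contact mic is active.

    A frame counts as active when its energy exceeds the estimated noise
    floor (10th percentile of frame energies) by `threshold_db` decibels.
    Trailing samples that do not fill a whole frame are ignored.
    """
    num_frames = len(contact_signal) // frame_len
    frames = contact_signal[: num_frames * frame_len].reshape(num_frames, frame_len)
    energy_db = 10 * np.log10(np.mean(frames**2, axis=1) + 1e-12)
    noise_floor = np.percentile(energy_db, 10)
    return energy_db > noise_floor + threshold_db
```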

The Tympan earpieces do not have built-in contact microphones, so we used an off-the-shelf throat microphone instead. The video below compares the sound from the contact microphone with that from the Tympan’s built-in microphone. The contact microphone sounds poor even in quiet conditions, but it is mostly unaffected by noise. It can therefore be used to detect when each person is talking and to update the adaptive filters accordingly.
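A VAD can gate the adaptive filters: a talker's filter adapts only while that talker is speaking and is frozen otherwise, so background noise or other voices cannot drag it away from the talker's acoustic path. The sketch below adds such a gate to a basic NLMS filter. The name `gated_nlms` and the per-sample boolean `active` mask are hypothetical; in practice the mask would come from a detector running on the talker's contact microphone.

```python
import numpy as np


def gated_nlms(reference, earpiece, active, num_taps=16, step=0.5, eps=1e-8):
    """NLMS filter that adapts only on samples where `active` is True.

    reference: low-noise signal for one talker (e.g. a remote microphone)
    earpiece:  signal at the listener's earpiece
    active:    boolean array, True while this talker is speaking
    Returns the filtered reference and the final filter taps.
    """
    w = np.zeros(num_taps)
    buf = np.zeros(num_taps)  # newest reference sample first
    out = np.zeros(len(reference))
    for n in range(len(reference)):
        buf = np.roll(buf, 1)
        buf[0] = reference[n]
        out[n] = w @ buf  # the filter is always applied...
        if active[n]:     # ...but only updated while the talker speaks
            e = earpiece[n] - out[n]
            w += step * e * buf / (buf @ buf + eps)
    return out, w
```

In a simulation where the earpiece matches a 2-sample, 0.6-gain path during the first half and is replaced by unrelated noise in the second half (with `active` False there), the gated filter should keep tap 2 near 0.6 instead of drifting toward zero.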