Enhancing Group Conversations with Smartphones and Hearing Devices

This post describes our paper “Adaptive Crosstalk Cancellation and Spatialization for Dynamic Group Conversation Enhancement Using Mobile and Wearable Devices,” presented at the International Workshop on Acoustic Signal Enhancement (IWAENC) in September 2022.

Group Conversation Enhancement

One of the most common complaints from people with hearing loss – and everyone else, really – is that it’s hard to hear in noisy places like restaurants. Group conversations are especially difficult since the listener needs to keep track of multiple people who sometimes interrupt or talk over each other. Conventional hearing aids and other listening devices don’t work well for noisy group conversations. Our team is developing systems to help people hear better in group conversations by connecting hearing devices with other nearby devices. Previously, we showed how wireless remote microphone systems can be improved to support group conversations and how a microphone array can enhance talkers in the group while removing outside noise. But both of those approaches rely on specialized hardware, which isn’t always practical. What if we could build a system using devices that users already have with them?

In this work, we enhance a group conversation by connecting the hearing devices and mobile phones of everyone in the group. Each user wears a pair of earpieces – which could be hearing aids, “hearables”, or wireless earbuds – and places their mobile phone on the table in front of them. The earpieces and phones all transmit audio data to each other, and we use adaptive signal processing to generate an individualized sound mixture for each user. We want each user to be able to hear every other user in the group, but not background noise from other people talking nearby. We also want to remove echoes of the user’s own voice, which can be distracting. And as always, we want to preserve the spatial cues that help users tell which direction sound is coming from. Those spatial cues are especially important for group conversations where multiple people might talk at once.

Adaptive Crosstalk Cancellation and Spatialization

The basic principle of the system is simple: Each user places their smartphone in front of them on the table, and the smartphones pick up their voices. Because the phones are close to their respective users, they can capture speech with relatively little noise. A feature like this is already available in some commercial products, such as the Apple iPhone and AirPods, for one-on-one conversations. Group conversations are more difficult, though, because the users tend to sit close together. That means each device picks up speech from all the talkers in the group. Audio engineers call that “crosstalk”, and it can lead to distortion effects when different versions of the same sound are mixed together. For hearing devices, it can also cause the user to hear a delayed echo of their own voice, which can be very distracting and can even make it difficult to speak.

The usual solution to crosstalk and own-voice echo is to mute all but one microphone at a time. That can be done either manually, as in theatrical productions, or automatically using a voice activity detector (VAD). If a user’s microphone is only on when they’re speaking, then we don’t need to worry about crosstalk. The problem is that there can be a delay between when a user starts talking and when their microphone turns on, which causes the listener to miss the first few syllables. Furthermore, in a noisy environment like a restaurant, frequent muting and unmuting will cause the background noise to cut in and out, which can be more annoying than the noise itself. And of course, muting only works if one person talks at a time, which might be true for a panel discussion, but not for a dinner party.
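
To make the onset problem concrete, here is a minimal sketch of VAD-based muting. It assumes a simple frame-energy detector whose decision arrives one frame late; the function name `vad_gate`, the frame size, and the threshold are all made up for illustration, and white noise stands in for speech.

```python
import numpy as np

def vad_gate(x, frame=160, threshold=0.01):
    """Mute frames using an energy detector whose decision lags by one frame."""
    num_frames = len(x) // frame
    frames = x[:num_frames * frame].reshape(num_frames, frame)
    # Energy-based voice activity decision for each frame.
    active = np.mean(frames ** 2, axis=1) > threshold
    # Assumed one-frame lag: frame k is gated by the decision for frame k-1.
    gate = np.concatenate([[False], active[:-1]])
    return (frames * gate[:, None]).ravel(), gate

rng = np.random.default_rng(2)
speech = np.zeros(1600)
speech[480:1120] = rng.standard_normal(640)  # talker is active in frames 3 to 6

gated, gate = vad_gate(speech)
print("gate per frame:", gate.astype(int))
```

In this toy example the talker starts in frame 3, but the gate does not open until frame 4, so the first frame of speech is lost. That is the clipped onset described above.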

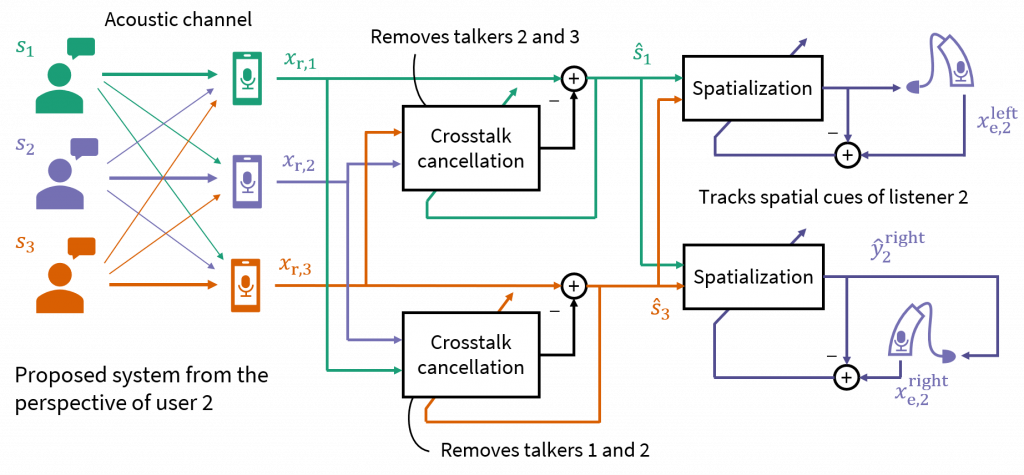

Instead of muting the microphones, our proposed system uses adaptive crosstalk cancellation to remove the voices of all other users from each mobile device. If I’m eating dinner with two other people, then my phone will try to focus on my voice and cancel out the voices of the other two people. Likewise, their phones will try to cancel out my voice so that I don’t hear an echo of myself when I talk. Like a muting system, this cancellation system is only active while the system thinks the user isn’t talking. Unlike in a muting system, the talker’s voice won’t be cut off if the voice activity detector makes a mistake.
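
As a rough illustration of the idea, the sketch below cancels one other talker from one phone microphone using a normalized least-mean-squares (NLMS) filter. NLMS is a textbook adaptive filter used here as a stand-in; it is not necessarily the algorithm from the paper. The signals are synthetic white noise, the acoustic `path` is invented, and the toy assumes the other phone picks up none of my voice, which would not hold at a real table.

```python
import numpy as np

def nlms_cancel(primary, reference, adapt, num_taps=32, step=0.5, eps=1e-8):
    """Subtract an adaptively filtered copy of `reference` from `primary`.

    adapt[n] is True at samples where the filter is allowed to update.
    """
    w = np.zeros(num_taps)    # estimated crosstalk path
    buf = np.zeros(num_taps)  # most recent reference samples, newest first
    out = np.zeros(len(primary))
    for n in range(len(primary)):
        buf = np.roll(buf, 1)
        buf[0] = reference[n]
        out[n] = primary[n] - w @ buf  # the microphone is never muted
        if adapt[n]:
            w += step * out[n] * buf / (buf @ buf + eps)
    return out

rng = np.random.default_rng(0)
n = 8000
other = rng.standard_normal(n)                 # other talker, as heard by their phone
path = np.array([0.6, 0.3, -0.2, 0.1])         # hypothetical path to my phone
crosstalk = np.convolve(other, path)[:n]

talking = np.arange(n) >= n // 2               # I start talking halfway through
own = np.where(talking, 0.5 * rng.standard_normal(n), 0.0)
primary = own + crosstalk                      # what my phone records

# Adapt only while the (here, perfect) voice activity detector says I am silent.
out = nlms_cancel(primary, other, adapt=~talking)
```

Here the filter learns the crosstalk path while I am silent and is frozen once I start talking, so the output in the second half is my voice with the other talker removed. If the detector made a mistake, the filter would adapt at the wrong time, but nothing would be muted.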

After crosstalk cancellation, the phone signals have a good signal-to-noise ratio, but they don’t have the correct spatial cues. Without further processing, the system would sound like being on a conference call rather than talking face-to-face. To make the system immersive, the enhanced phone signals are sent to each user’s hearing device for spatialization. The spatialization filters, which are personalized for each listener, alter the phone signals to match the signals at the two ears. It should sound like the listener is hearing through their own ears, but with less noise. The filters adapt over time as the listener moves their head and as the talkers move around.
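
One way to picture the spatialization step is as a pair of adaptive filters, one per ear, that each learn to map the clean phone signal onto what that ear's microphone hears. The sketch below does this with NLMS again, which is an assumption for illustration rather than the paper's method. The delays, gains, and noise levels are invented, and `nlms_spatialize` and `delayed` are hypothetical helper names.

```python
import numpy as np

def nlms_spatialize(source, ear, num_taps=16, step=0.1, eps=1e-8):
    """Filter `source` so that it matches the noisy `ear` microphone signal."""
    w = np.zeros(num_taps)
    buf = np.zeros(num_taps)
    out = np.zeros(len(source))
    for n in range(len(source)):
        buf = np.roll(buf, 1)
        buf[0] = source[n]
        out[n] = w @ buf              # spatialized output: filtered clean signal
        err = ear[n] - out[n]         # mismatch with what the ear actually hears
        w += step * err * buf / (buf @ buf + eps)
    return out, w

def delayed(x, delay, gain):
    """Toy acoustic path: a pure delay and gain."""
    return gain * np.concatenate([np.zeros(delay), x[:len(x) - delay]])

rng = np.random.default_rng(1)
n = 8000
talker = rng.standard_normal(n)                # enhanced phone signal
noise_l, noise_r = 0.5 * rng.standard_normal((2, n))

# Talker is on the listener's left: earlier and louder at the left ear.
left_ear = delayed(talker, 2, 1.0) + noise_l
right_ear = delayed(talker, 5, 0.6) + noise_r

out_l, w_l = nlms_spatialize(talker, left_ear)
out_r, w_r = nlms_spatialize(talker, right_ear)
print("peak taps:", np.argmax(np.abs(w_l)), np.argmax(np.abs(w_r)))
```

The listener hears `out_l` and `out_r`, which carry the time and level differences between the ears but much less of the ear-level noise, because the noise is uncorrelated with the phone signal. Since the filters keep updating, they would also follow the cues as the toy delays and gains change.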

Demonstration

We tested the system using real-life recordings from three people sitting around a table talking to each other. A set of loudspeakers placed around the room played speech recordings to simulate background noise. Each user wore a pair of earbuds with binaural microphones. In the paper, we simulated mobile devices using studio microphones placed on the table, but in the demo video below, we used real smartphones sitting in front of each user. We also present sound captured from lapel microphones, which can perform better than smartphones if the users are willing to carry extra devices. The experiment included dozens of microphones to capture data for other research, but for this demonstration, the only other microphones we used were contact microphones for voice activity detection; in a real product, they would be replaced by bone conduction microphones in the earbuds.

The demo video below is shown from Kanad’s perspective. You’ll be seeing through a camera mounted on his head and listening to the output of his hearing device. Listen through headphones to hear the spatial cues change as he turns his head.

We plan to keep refining the system to improve noise reduction and to better track motion when the users move around quickly. We also need to improve its robustness to delay, since current consumer wireless standards, like Bluetooth, add too much latency for a real-time listening system. With further refinement, we hope that this system will let people hear much better in restaurants and other noisy environments using the devices they already have with them.