Improving Machine Learning for Electric Guitars

This post describes two recent papers by our collaborator Hegel Pedroza: “Guitar-TECHS: An Electric Guitar Dataset Covering Techniques, Musical Excerpts, Chords and Scales Using a Diverse Array of Hardware,” presented at ICASSP 2025 [full text on arXiv], and “Leveraging Real Electric Guitar Tones and Effects to Improve Robustness in Guitar Tablature Transcription Modeling,” presented at DAFx 2024 [full text on arXiv].

Introduction

Machine learning is a powerful tool for producing and studying music. For example, we can use it for automatic music transcription, where we take a music recording and analyze it to create sheet music, MIDI files, or other symbolic representations; performance generation, where computer software creates new music; or timbre transfer, where we change a recording to make one instrument or artist sound like another. Over the last year, we have been collaborating with Hegel Pedroza, a researcher and musician at the National Autonomous University of Mexico, on a project to improve machine learning performance for electric guitars. The project also includes Wallace Abreu from the Federal University of Rio de Janeiro and Iran Roman from Queen Mary University of London.

As in all areas of machine learning, music analysis and generation systems rely on large, high-quality datasets. For guitar music, we need both audio recordings and tablature annotations, which capture the positions of the fingers on the guitar strings over time. Most guitar datasets, like the popular GuitarSet, are captured under carefully controlled conditions, such as in a recording studio. However, real guitar music uses a huge variety of guitar types, recording equipment, and acoustic environments: an electric guitar recorded in your living room with a smartphone sounds very different from an acoustic guitar in a recording booth. Hegel has been working to make machine learning tools more reliable in these real-world conditions.

Guitar-TECHS: A new electric guitar dataset

To improve the performance and robustness of machine learning for guitar music, we recently introduced a new dataset called Guitar-TECHS. It features over five hours of guitar music covering a wide variety of techniques, chords, scales, and musical excerpts, performed by three professional guitarists with different instruments, hardware setups, and recording environments. The rich musical content includes:

- Techniques: Alternate Picking, Palm Mute, Vibrato, Harmonics, Pinch Harmonics, Bendings.

- Chords: Various triads (major, minor, augmented, diminished) and seventh chords (major 7, minor 7, dominant 7, minor 7 flat 5).

- Scales: Major scales in all twelve keys, ascending and descending box patterns.

- Musical Excerpts: Full-length, original guitar solos showcasing diverse playing styles and articulation techniques.

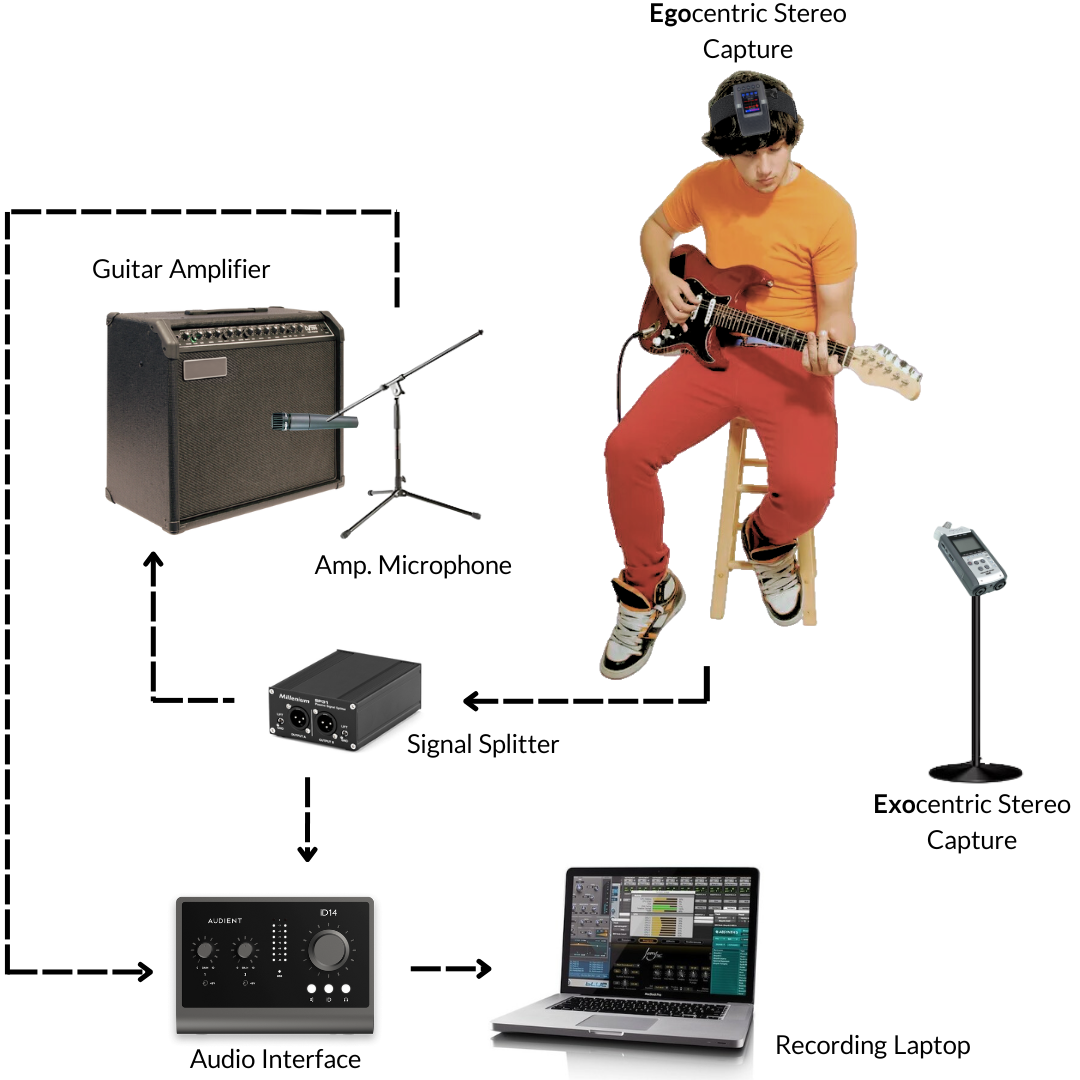

To support a variety of research applications, the dataset features multi-perspective recordings from several microphones: an egocentric head-mounted stereo microphone, an exocentric microphone five feet in front of the performer, and a microphone in front of the guitar amplifier, as well as the audio signal from the guitar itself. Furthermore, a multi-track MIDI pickup captures the onset and duration of each note played on each string. The signals and annotations are carefully synchronized and time-aligned to provide reliable training data for transcription.

The Guitar-TECHS dataset was announced last week at ICASSP 2025 and is now freely available under a CC-BY 4.0 license. For more information, see the Guitar-TECHS website.

Guitar tablature transcription

To demonstrate the value of the new dataset, Hegel applied it to the task of guitar tablature transcription (GTT), which is a form of automatic music transcription. Unlike standard Western sheet music notation, tablatures illustrate finger placements on the guitar. Hegel based his experiments on TabCNN, a machine learning model for GTT based on a convolutional neural network architecture. The neural network takes the music signal as input and produces tablature labels as outputs. To train the neural network, we need both audio recordings and corresponding tablature annotations, like MIDI data captured by a pickup.
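To make the link between pickup data and tablature concrete, here is a minimal sketch of how per-string MIDI notes could be turned into fret labels. It assumes standard tuning and a simple note record; the names `StringNote`, `fret_of`, and `frame_labels` are hypothetical and are not taken from the Guitar-TECHS tools or from TabCNN.

```python
from dataclasses import dataclass

# Open-string MIDI pitches in standard tuning (assumed here),
# from string 6 (low E2) to string 1 (high E4).
OPEN_STRING_MIDI = {6: 40, 5: 45, 4: 50, 3: 55, 2: 59, 1: 64}


@dataclass(frozen=True)
class StringNote:
    """One note from a per-string pickup (hypothetical record layout)."""
    string: int        # 6 = low E ... 1 = high E
    midi_pitch: int    # MIDI note number reported for that string
    onset: float       # seconds
    duration: float    # seconds


def fret_of(note):
    """Fret = sounding pitch minus the pitch of the open string."""
    fret = note.midi_pitch - OPEN_STRING_MIDI[note.string]
    if fret < 0:
        raise ValueError("pitch is below the open string")
    return fret


def frame_labels(notes, num_frames, frames_per_second):
    """Per-frame labels: one fret per string, or -1 when the string is silent."""
    labels = [[-1] * 6 for _ in range(num_frames)]
    for note in notes:
        start = int(note.onset * frames_per_second)
        end = int((note.onset + note.duration) * frames_per_second)
        for t in range(start, min(end, num_frames)):
            labels[t][6 - note.string] = fret_of(note)  # column 0 = string 6
    return labels


notes = [StringNote(6, 43, 0.0, 0.5), StringNote(1, 64, 0.5, 0.5)]
labels = frame_labels(notes, num_frames=4, frames_per_second=4)
```

Because the pickup reports each string separately, the fret follows directly from the pitch; from audio alone, the same pitch can often be played at several string and fret positions, which is what makes tablature transcription harder than pitch transcription.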

He compared the performance of the TabCNN model trained on GuitarSet alone with its performance when trained on both GuitarSet and the new Guitar-TECHS dataset. He found that the expanded dataset produced modest improvements in multi-pitch accuracy and tablature precision. More importantly, it showed a notable improvement when tested on recordings whose hardware and recording conditions differed from those in both GuitarSet and Guitar-TECHS. This demonstrates that the extra data improved robustness and generalization ability.
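The difference between pitch-level and tablature-level scores can be illustrated with a small sketch. It uses frame-wise precision as a stand-in for both metrics, and it assumes standard tuning; the exact metric definitions used in the papers may differ, and the function names here are hypothetical.

```python
# Open-string MIDI pitches in standard tuning (assumed), string 6 to string 1.
OPEN_STRING_MIDI = {6: 40, 5: 45, 4: 50, 3: 55, 2: 59, 1: 64}


def precision(predicted, reference):
    """Fraction of predicted items that also appear in the reference."""
    if not predicted:
        return 0.0
    return len(predicted & reference) / len(predicted)


def to_pitches(tab):
    """Drop string information: (frame, string, fret) -> (frame, MIDI pitch)."""
    return {(frame, OPEN_STRING_MIDI[string] + fret) for frame, string, fret in tab}


# Each item is (frame index, string, fret).
reference = {(0, 6, 5), (1, 5, 2)}
# Frame 0 has the right pitch (A2) but on the open 5th string
# instead of the 5th fret of the 6th string.
predicted = {(0, 5, 0), (1, 5, 2)}

tab_precision = precision(predicted, reference)
pitch_precision = precision(to_pitches(predicted), to_pitches(reference))
```

In this toy case the pitch-level score is perfect while the tablature-level score is not, because one note was assigned to the wrong string.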

Improving tablature transcription with data augmentation

Even with the new data in Guitar-TECHS, there is still a relatively small amount of high-quality, annotated guitar data available for training machine learning algorithms. To make guitar tablature transcription more reliable for a wide variety of guitars, recording equipment, and environments, we can use a technique called data augmentation during training. The key idea is to combine real data with carefully designed synthetic data. For example, some research in automatic music transcription has used music synthesizer software to generate artificial music to help with training.

In a paper presented last year at the International Conference on Digital Audio Effects (DAFx), Hegel proposed a new approach to data augmentation using real electric guitar tones and effects rather than software synthesizers. He used existing tablatures, but replaced their associated recordings with simulated performances created using randomly selected tones from pre-recorded guitar notes. These recordings used a variety of effects hardware to produce different timbre qualities.
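The general idea can be sketched as follows: keep the note events from an existing tablature, pick a tone at random, and rebuild the audio from pre-recorded single notes in that tone. This is only an illustration under simplifying assumptions, not the method from the paper. The tone bank layout, the choice of one tone per rendered performance, and the name `render_with_random_tone` are all hypothetical.

```python
import random


def render_with_random_tone(notes, tone_bank, sample_rate, rng):
    """Rebuild audio for a list of notes using one randomly chosen tone.

    notes:     list of (onset_seconds, duration_seconds, midi_pitch)
    tone_bank: {tone_name: {midi_pitch: list of float samples}}
               (hypothetical layout for the pre-recorded notes)
    """
    tone_name = rng.choice(sorted(tone_bank))
    samples_by_pitch = tone_bank[tone_name]

    # Convert note times to sample positions.
    placed = []
    for onset, duration, pitch in notes:
        start = int(round(onset * sample_rate))
        length = int(round(duration * sample_rate))
        placed.append((start, length, pitch))

    total = max(start + length for start, length, _ in placed)
    audio = [0.0] * total

    # Mix each pre-recorded note into the output, trimmed to the note length.
    for start, length, pitch in placed:
        clip = samples_by_pitch[pitch][:length]
        for i, value in enumerate(clip):
            audio[start + i] += value
    return tone_name, audio


# Toy tone bank: constant "samples" stand in for real recordings.
tone_bank = {
    "clean": {40: [1.0] * 8, 45: [1.0] * 8},
    "fuzz": {40: [2.0] * 8, 45: [2.0] * 8},
}
notes = [(0.0, 0.5, 40), (0.25, 0.5, 45)]
tone_name, audio = render_with_random_tone(
    notes, tone_bank, sample_rate=8, rng=random.Random(0)
)
```

The tablature annotations stay unchanged, so each rendered version gives the model the same labels paired with a different timbre.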

To test how well the augmentation method works on new data, Hegel created another original electric guitar dataset, EGSet12, with twelve original solo electric guitar performances covering a broad range of musical styles. The recording setup is very different from the setups used for the training data, making it valuable for assessing robustness and generalization. The results showed that the augmentation strategy has little effect on data that closely matches the training set, but produces notable improvement on the new dataset, demonstrating improved generalization.

The video below shows examples of guitar tablature transcription with and without the expanded training data.